Survey researchers love taking surveys. It keeps us up to date on the latest survey trends and shows us what everyday survey creators do right (and wrong) when designing surveys.

One of the most common problems we see is that many people don’t use skip logic.

Skip logic is a survey design feature that sends respondents to a later page, or to a specific question on a later page, based on how they answered an earlier question. This lets you design personalized surveys in which respondents answer only the questions that apply to them.

This might sound like a nice-to-have rather than a necessity, but leaving skip logic out can put your data at risk.

We ran an experiment to find out whether a survey that uses skip logic yields better data than one that doesn't. Using SurveyMonkey Audience, we sent out two surveys with the same 10 questions about our favorite TV show, Game of Thrones.

One survey used skip logic; the other relied on the workarounds we most often see survey creators use to avoid it. Here's what we found:

Ignoring skip logic can damage your response data

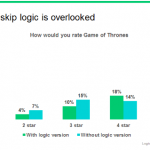

At first glance, nothing seems wrong with the question pictured here: “How would you rate Game of Thrones?” It's simple and to the point, but it only works in context. What if the respondent hasn't watched the show?

Errors like this can have a large effect on your data. In the survey that used skip logic, this question received an average rating of 4.15 stars. In the version without skip logic, the average was 2.98 stars, more than a full star lower.

What was different? In the skip logic version, we first asked respondents whether they'd seen the show, and only those who answered “yes” were asked to rate it.
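Behind the scenes, a skip logic rule is just a branch on an earlier answer. The Python sketch below models that two-step flow as a small rule table: each page maps certain answers to a later page and otherwise falls through to a default. The page IDs, the `Question` class, and the `route` function are hypothetical illustrations, not how SurveyMonkey actually implements skip logic.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Question:
    text: str
    # Answer -> ID of the later page to jump to. Answers without a rule
    # fall through to `default_next`.
    skip_rules: Dict[str, str] = field(default_factory=dict)
    default_next: Optional[str] = None  # None means the survey ends here


# Hypothetical flow modeled on the skip logic version of our survey.
SURVEY: Dict[str, Question] = {
    "seen": Question("Have you watched Game of Thrones?",
                     skip_rules={"No": "thanks"}, default_next="rate"),
    "rate": Question("How would you rate Game of Thrones?", default_next="thanks"),
    "thanks": Question("Thanks for taking our survey!"),
}


def route(survey: Dict[str, Question], answers: Dict[str, str], start: str) -> List[str]:
    """Return the page IDs a respondent sees, in order, given their answers."""
    path: List[str] = []
    qid: Optional[str] = start
    while qid is not None:
        question = survey[qid]
        path.append(qid)
        answer = answers.get(qid)
        # Follow a matching skip rule if one exists; otherwise use the default.
        if answer is not None and answer in question.skip_rules:
            qid = question.skip_rules[answer]
        else:
            qid = question.default_next
    return path


print(route(SURVEY, {"seen": "Yes", "rate": "5"}, "seen"))  # ['seen', 'rate', 'thanks']
print(route(SURVEY, {"seen": "No"}, "seen"))                # ['seen', 'thanks']
```

A respondent who answers “No” never reaches the rating question, so they can't drag its average down with a rating for a show they haven't seen.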

Why such a big gap? The version without skip logic received far more one-star ratings, possibly because respondents who hadn't seen the show had no answer option that applied to them and responded out of frustration.

Inaccurate data like this can lead survey creators to the wrong conclusions. Without skip logic, our data suggests that Game of Thrones is only mildly popular, when in fact it's wildly popular.

The most common workarounds don’t work, either

People sometimes come up with creative ways to avoid skip logic, such as adjusting the question wording or the answer options so the question fits a wider audience. These solutions aren't just inelegant; they can harm your data.

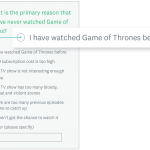

The answer option workaround: In the example pictured here, we didn't use skip logic to hide this question, which asks why respondents haven't watched the show, from people who had already watched Game of Thrones. Instead, we showed it to everyone and expected viewers to select “I have watched Game of Thrones before.”

This seemingly straightforward workaround ended up leading many of our respondents astray. Nearly half of respondents (43%) chose a reason for not watching Game of Thrones, even though they had said in an earlier question that they had seen the show.

Was it a mistake? Or were they frustrated that the survey failed to account for their previous response? It’s difficult to say, but we’re certain that their responses to this question are inaccurate and add noise to the response data.

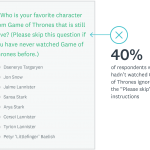

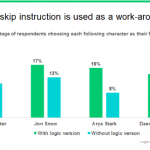

The question instruction workaround: Another common tactic is to add an instruction telling respondents to skip the question if it doesn't apply to them.

In the example pictured here, respondents who hadn't watched Game of Thrones were instructed to skip the question.

Did it work? Not exactly. Roughly 2 out of 5 respondents who had said they hadn't watched Game of Thrones answered the question anyway, which weakened our findings. The most-picked characters matched those in the survey that used skip logic, but each received a much smaller share of the votes, possibly because non-viewers picked characters more or less at random.

Skip logic improves the survey-taking experience

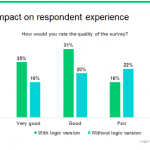

At the end of both versions, we asked respondents to rate the quality of the survey. The percentage who rated it “poor” or “fair” was much higher in the survey without skip logic than in the one that used it.

The completion rate (the percentage of respondents who finished the whole survey) was also lower in the version without skip logic, and 14% fewer respondents in that version said they'd be willing to take another survey in the future. This suggests that the harm from avoiding skip logic could extend beyond a single survey.

Even in a simple 10-question survey, skip logic made a clear difference. It affected the quality of the responses, the number of respondents who completed the survey, and the respondents' overall experience.

Given these findings, it's worth taking the time to set up skip logic and to test it to make sure it's programmed correctly. You'll be rewarded with more accurate responses and happier respondents.
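Part of that testing can be automated. The Python sketch below assumes the skip logic has been written out as a plain map from each question to its answer-based jumps and its default next question. The `QuestionLogic` structure and `check_skip_logic` function are hypothetical, not a SurveyMonkey feature. It flags two easy-to-miss mistakes: jumps that point to a missing or earlier question (skip logic only sends respondents forward), and questions that no path through the survey ever reaches.

```python
from collections import deque
from typing import Dict, List, Optional, TypedDict


class QuestionLogic(TypedDict):
    rules: Dict[str, str]  # answer -> ID of the question to jump to
    next: Optional[str]    # default next question; None ends the survey


def check_skip_logic(survey: Dict[str, QuestionLogic], start: str) -> List[str]:
    """Return a list of problems found in a survey's skip logic."""
    problems: List[str] = []
    order = list(survey)  # question IDs in page order (dicts preserve insertion order)

    # 1. Every jump must land on an existing question later in the survey.
    for qid, logic in survey.items():
        targets = list(logic["rules"].values())
        if logic["next"] is not None:
            targets.append(logic["next"])
        for target in targets:
            if target not in survey:
                problems.append(f"{qid}: jumps to missing question {target!r}")
            elif order.index(target) <= order.index(qid):
                problems.append(f"{qid}: jumps backward to {target!r}")

    # 2. Every question must be reachable from the start (breadth-first search).
    reached = {start}
    queue = deque([start])
    while queue:
        logic = survey[queue.popleft()]
        for target in [*logic["rules"].values(), logic["next"]]:
            if target in survey and target not in reached:
                reached.add(target)
                queue.append(target)
    problems.extend(f"{qid}: unreachable" for qid in order if qid not in reached)
    return problems


good_survey: Dict[str, QuestionLogic] = {
    "seen": {"rules": {"No": "thanks"}, "next": "rate"},
    "rate": {"rules": {}, "next": "thanks"},
    "thanks": {"rules": {}, "next": None},
}
# Bug: the default path also skips to the end, so nobody ever sees "rate".
broken_survey: Dict[str, QuestionLogic] = {
    **good_survey,
    "seen": {"rules": {"No": "thanks"}, "next": "thanks"},
}

print(check_skip_logic(good_survey, "seen"))    # []
print(check_skip_logic(broken_survey, "seen"))  # ['rate: unreachable']
```

A check like this catches structural mistakes, but it can't tell whether a rule matches your intent, so it's still worth clicking through the survey yourself with a few different answer combinations.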

If you have questions about how to make skip logic work for you, we’ve got answers.