When creating questions for your survey, you may be tempted to put them in matrix grids. After all, they’re faster and easier to create, and studies have found that respondents fill them out more quickly. The problem is that rather than prompting respondents to think carefully about each question, matrix grids encourage them to complete surveys haphazardly.

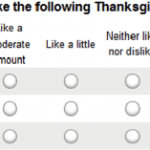

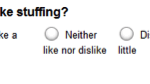

If you look carefully at most matrix grids, though, you’ll notice that the questions are asked slightly differently than separated questions. Compare the matrix grid below with the separated questions that follow:

Imagine you’re filling out the grid, which asks, “How much do you like or dislike the following Thanksgiving dishes?” You’ll probably think immediately about Thanksgiving and answer the questions accordingly. The separated questions, however, never mention the topic. You’ll answer each one on its own, rather than mentally connecting them all to Thanksgiving. Thinking about the questions in different ways can produce different responses. For instance, if you generally don’t like stuffing but consider it a once-a-year Thanksgiving essential, you’ll probably rate it higher in the matrix grid than in the separated questions. How can we be sure, then, that it’s the layout of matrix grids that affects responses rather than the way the questions are worded?

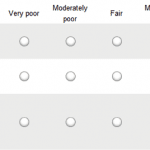

Here at SurveyMonkey, we put this to the test. Respondents were randomly assigned to two groups. We asked respondents identical questions in the same way; the only difference was whether the questions were put into matrix grids (many questions per page) or separated (one question per page). Here’s the question matrix:

And here are a few of the separated questions, to give you an idea of what this looked like:

Even though the questions were asked identically, we found that the two groups responded differently.
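Random assignment is what lets us attribute these differences to layout rather than to who happened to answer. The post doesn’t describe the mechanism SurveyMonkey used, so the sketch below is only one simple way to do it, under our own assumptions: shuffle the respondent list and split it in half. `assign_layouts` is a hypothetical helper, not a SurveyMonkey API.

```python
import random

def assign_layouts(respondent_ids, seed=None):
    """Randomly split respondents into two groups of (nearly) equal size.

    Hypothetical helper: "matrix" respondents see the questions in grids
    (many per page); "separated" respondents see one question per page.
    """
    rng = random.Random(seed)  # seeded generator so the split is reproducible
    ids = list(respondent_ids)
    rng.shuffle(ids)  # random order, so neither group differs systematically
    half = len(ids) // 2
    assignment = {rid: "matrix" for rid in ids[:half]}
    assignment.update({rid: "separated" for rid in ids[half:]})
    return assignment

groups = assign_layouts(range(10), seed=42)
print(sum(1 for layout in groups.values() if layout == "matrix"))  # 5
```

Shuffling and splitting keeps the two groups the same size; flipping an independent coin for each respondent would also be random, but the group sizes would vary.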

The two groups’ responses had different means (averages). For example, when asked, “How good or bad is the progress of the U.S.-led effort to rebuild Iraq?”, the mean for the matrix group was 3.49 (on a scale from 1 to 7), while the mean for the separated-questions group was 3.68. This difference was significant at the .001 level.

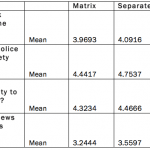

Here are some of the other mean differences, all statistically significant:

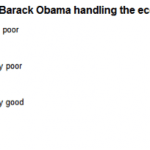
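The post reports significance levels but not which test produced them. As an illustration only, here is one common way to check whether two group means differ: Welch’s t-test, which does not assume the groups have equal variances. The ratings below are made up, not the study’s data, and `two_sided_p_normal` uses a normal approximation that is only reasonable for large samples (it is not applied to the tiny toy sample).

```python
from math import sqrt
from statistics import NormalDist, fmean, variance

def welch_t(a, b):
    """Welch's t statistic for mean(a) - mean(b), without assuming equal variances."""
    # Standard error of the difference, using sample variances (n - 1 denominator)
    se = sqrt(variance(a) / len(a) + variance(b) / len(b))
    return (fmean(a) - fmean(b)) / se

def two_sided_p_normal(t):
    """Two-sided p-value from the normal approximation (reasonable for large samples)."""
    return 2 * (1 - NormalDist().cdf(abs(t)))

# Made-up 1-7 ratings for illustration only; not the study's data
matrix = [3, 4, 2, 5, 3, 4, 3, 4]
separated = [4, 4, 3, 5, 4, 4, 3, 5]
t = welch_t(matrix, separated)
print(f"mean difference = {fmean(matrix) - fmean(separated):.2f}, t = {t:.2f}")
```

Welch’s version is a sensible default here because, as the variance results show, the two layouts need not produce equally spread-out answers.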

We also found that matrix grid questions produced more variance than separated questions. Variance measures how spread out responses are. If respondents fill out a grid without paying much attention, perhaps clicking buttons at random, we’d expect their responses to have larger variance. That is exactly what we found. When we asked, “How well is Barack Obama handling the quality of schools in America?”, the variance for the matrix group was 2.789, while the variance for the separated-questions group was 2.269. This difference was significant at the .001 level.

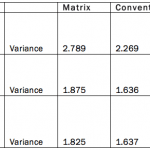
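For a sense of scale, the two variances reported for the Obama question differ by a ratio of about 1.23. The sketch below computes that ratio from the reported numbers and, with made-up responses, shows how scattered answers inflate the sample variance. Turning the ratio into a p-value would require the group sizes, which the post doesn’t give, plus a test such as the F-test or Levene’s test; we make no assumption about which one was used.

```python
from statistics import variance

def variance_ratio(var_a, var_b):
    """Ratio of two variances; above 1 means group a's answers are more spread out."""
    return var_a / var_b

# Variances reported in the post for the Obama/schools question
matrix_var = 2.789
separated_var = 2.269
print(round(variance_ratio(matrix_var, separated_var), 3))  # 1.229

# Made-up raw answers on a 1-7 scale: scattered clicks vs. consistent answers
scattered = [1, 7, 4, 2, 6, 3, 5, 4]
consistent = [4, 4, 5, 4, 3, 4, 5, 4]
print(variance(scattered), variance(consistent))  # sample variances (n - 1 denominator)
```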

Here are some other differences in variance:

So there are differences between the groups. But how do we know whether those differences are good or bad? Greater variance can be a bad sign, but perhaps people’s opinions on the topics we measured really are spread out. Likewise, the means differ between the two groups, but maybe the matrix grid means differ because they’re more precise. How do we know matrix grids don’t actually produce better data?

We can’t simply ask respondents how accurate their responses were. Psychological research has found that people have limited insight into their own thought processes. And even if we did know them, if we had filled out a matrix grid quickly without much thought, we probably wouldn’t admit that to the researcher. Our way around this was to ask questions whose answers are known to be related to individual characteristics such as political party and political ideology. The more strongly responses track those measures, the better the survey has tapped people’s true opinions. Responses to matrix grids produced a lower R-squared value (.497) than responses to separated questions (.544). Because R-squared measures how much of the variation the relationship accounts for, this means responses to separated questions were more closely related to party and ideology, so the questionnaire with separated questions was the more accurate measure of respondents’ beliefs.

In essence, we’ve found that matrix grids produce different, less precise responses than separated questions, even when the questions are worded in exactly the same way. There’s good news and bad news. The bad news is that asking good questions isn’t the only thing you have to worry about: the layout of the survey also matters. The good news is that in your next survey, you can avoid the trap of matrix grids, as enticing as they may be, and get better data. And better data is what we all want, isn’t it? Besides stuffing, that is.

P.S. Special thanks go to Annabell Suh, our fantastic methodology intern who co-wrote this blog post.