How much time would you be willing to spend completing a customer satisfaction survey about a recent shopping experience? What about an employee satisfaction survey distributed by your Human Resources team? Or a feedback request from friends who hosted an event you attended?

Understanding your audience is important when constructing a survey, and it can inform decisions about survey length and how much detail to ask for. Because respondents have different motivations for responding, their tolerance for survey length varies.

How long will your survey take?

Our AI-powered tool, SurveyMonkey Genius, can estimate your survey's completion time. Just preview your survey to see the estimate.

What we analyzed:

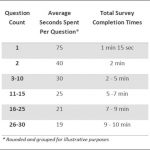

We wanted to understand how survey length, measured by number of questions, affects the time respondents spend completing surveys. To understand this relationship, we took a random sample of roughly 100,000 surveys that were 1 to 30 questions long and analyzed the median amount of time that respondents took to complete them.

In ideal circumstances and over a large, randomized sample of responses, the average time it takes to answer a question should not vary based on the length of the survey, so a linear relationship between the number of questions in a survey and the time it takes to complete a survey should exist.
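To make the linear expectation concrete, here is a minimal Python sketch. It assumes you have the median completion time for each survey length; the function names, the 5% tolerance, and the sample numbers are all hypothetical and are not taken from the analysis described here. If the relationship were linear, dividing total time by the number of questions would give roughly the same value at every length.

```python
def seconds_per_question(median_seconds_by_length):
    """Divide each median completion time by its survey's question count."""
    return {
        n_questions: total_seconds / n_questions
        for n_questions, total_seconds in median_seconds_by_length.items()
    }


def looks_linear(median_seconds_by_length, tolerance=0.05):
    """Return True if time per question is roughly constant across lengths.

    The 5% tolerance is an arbitrary, illustrative threshold.
    """
    rates = list(seconds_per_question(median_seconds_by_length).values())
    return (max(rates) - min(rates)) <= tolerance * max(rates)


# Hypothetical, made-up data: exactly 30 seconds per question at every length.
hypothetical = {5: 150.0, 10: 300.0, 20: 600.0}

print(seconds_per_question(hypothetical))
print(looks_linear(hypothetical))
```

With real data, a per-question time that falls as surveys get longer would make `looks_linear` return `False`, which is the signal that respondents are not spending the same amount of time on each question.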

What we learned:

It may come as no surprise, however, that the relationship between the number of questions in a survey and the time spent completing it is not linear. The more questions you ask, the less time your respondents spend, on average, answering each question. When your respondents begin “satisficing” (in methodological terms) or “speeding” through a survey, the quality and reliability of your data can suffer. On average, we discovered that respondents take just over a minute to answer the first question in a survey (including the time spent reading any survey introductions) and spend about 5 minutes in total answering a 10-question survey. However, respondents take more time per question when responding to shorter surveys than when responding to longer ones.

Can we always assume that longer surveys contain less thorough answers? Not always, since it depends on the type of survey, the audience, and the relationship of respondents to surveyor, among other factors. However, our data shows that the longer a survey is, the less time respondents spend answering each question. For surveys longer than 30 questions, the average amount of time respondents spend on each question is nearly half of what they spend on surveys with fewer than 30 questions.

In addition to the decreased time spent answering each question as surveys grew in length, we saw survey abandonment rates increase for surveys that took more than 7-8 minutes to complete, with completion rates dropping anywhere from 5% to 20%. Tolerance for lengthier surveys was greater for work- or school-related surveys and lower for customer-related ones.
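As a rough worked example of the figures quoted above, the Python sketch below rounds "just over a minute" to 60 seconds and "about 5 minutes" to 300 seconds. Both are approximations, so the result is only indicative. The function names are hypothetical, and the 7-minute default is an assumption taken from the lower end of the 7-8 minute range.

```python
def average_seconds_after_first(total_seconds, first_question_seconds, n_questions):
    """Average time per question once the first question is set aside."""
    if n_questions < 2:
        raise ValueError("need at least two questions")
    return (total_seconds - first_question_seconds) / (n_questions - 1)


def exceeds_abandonment_threshold(estimated_minutes, threshold_minutes=7.0):
    """Flag surveys whose estimated completion time passes the threshold.

    The default of 7 minutes is an assumed cutoff within the 7-8 minute range.
    """
    return estimated_minutes > threshold_minutes


# Rounded figures: ~60 s for the first question, ~300 s for 10 questions.
rest = average_seconds_after_first(300.0, 60.0, 10)
print(round(rest, 1))  # 26.7 seconds for each of questions 2 through 10

print(exceeds_abandonment_threshold(5.0))  # False: a ~5-minute survey
```

Under these rounded inputs, the remaining nine questions average a little under half a minute each, which shows how much of a short survey's time goes to the first question and any introduction.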

What this means for you:

Take survey completion time into consideration as you design your next survey. Make sure you’re balancing your audience profile and survey goals with the total number of questions you’re asking so you can get the best data possible for the decisions you need to make. And if you expect your survey to have a low response rate, make sure you send it to enough recipients to get a good sample size.