There’s no question that your survey responses are a valuable tool.

The insights you get from them can help you make your next business decision or learn more about the people you’re most interested in.

But did you know that patterns in your survey responses can also reveal problems with the way you designed your survey? Studying your responses to see what went wrong can help you build better surveys in the future.

We’ll show you how to connect the dots between responses and survey errors. That way, you’ll learn to create surveys that better engage respondents and lead to more valuable responses for your team.

Did your skip logic make sense?

The misuse of skip logic may be the most common error in survey design.

Skip logic is a feature that “skips” a respondent to a specific question or page based on how they answer a question.

At its best, skip logic allows you to create a more personalized survey experience that delivers in-depth insights for your team. At its worst, it traps or confuses respondents, frustrating them and creating biases in your results.

For example, if a respondent tells you they’re vegan but is then sent to a question asking which type of meat they enjoy eating most, they’re more likely to leave your survey.

To spot when skip logic is used ineffectively, check for the following:

1. A spike in respondents who leave your survey after completing a question that uses skip logic

It’s easy to check for drop-offs using SurveyMonkey Analyze. In the Question Summaries section of your survey, you can find the number of respondents who answered a question and the number who skipped it.

A note about terminology here: In Analyze, a “skip” means a respondent simply didn’t answer a question. It’s unrelated to skip logic, the feature we mentioned earlier that helps survey creators make better surveys. Got it? OK, then let’s continue.

A “skip” in Analyze can signal one of two things: either a respondent skipped the question and continued with the survey, or they quit the survey entirely. When the number of skips jumps at one question and stays high for every question after it, people are dropping out of your survey.

You should always expect some people to drop out of your survey. But if too many people drop out, especially on a question that follows a skip logic question, it’s worth taking a closer look.
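If you’ve exported your responses, you can separate the two meanings of a skip yourself. The Python below is a minimal sketch, not SurveyMonkey’s implementation. It assumes a hypothetical export format: one list per respondent in question order, with `None` for a blank. A blank followed by a later answer counts as a skip, and a blank with nothing answered afterward counts as a quit. The function names, the `skip_logic_qs` set, and the 10% threshold are all illustrative assumptions. The sketch also assumes every respondent was shown every question, so you’d need to exclude questions that skip logic routed someone past before counting.

```python
from typing import Optional, Sequence

# Hypothetical export format: one list per respondent, in question order,
# with None wherever the respondent left the question blank.
Answer = Optional[str]


def classify_blanks(answers: Sequence[Answer]) -> list[str]:
    """Label each question for one respondent as 'answered', 'skipped', or 'quit'."""
    # Position of the last question this respondent answered (-1 if none).
    last = max((i for i, a in enumerate(answers) if a is not None), default=-1)
    labels = []
    for i, a in enumerate(answers):
        if a is not None:
            labels.append("answered")
        elif i < last:
            labels.append("skipped")  # blank, but they answered a later question
        else:
            labels.append("quit")  # blank, and nothing answered after it
    return labels


def quits_per_question(responses: Sequence[Sequence[Answer]]) -> list[int]:
    """Count, for each question, how many respondents abandoned the survey there."""
    counts = [0] * len(responses[0])
    for answers in responses:
        labels = classify_blanks(answers)
        if "quit" in labels:
            # The first 'quit' marks the question where they stopped for good.
            counts[labels.index("quit")] += 1
    return counts


def flag_after_skip_logic(quits: list[int], skip_logic_qs: set[int],
                          total: int, threshold: float = 0.10) -> list[int]:
    """Questions positioned right after a skip logic question whose quit share exceeds threshold."""
    return [q + 1 for q in sorted(skip_logic_qs)
            if q + 1 < len(quits) and quits[q + 1] / total > threshold]


responses = [
    ["yes", "vegan", "tofu", "5"],
    ["no", "vegan", None, None],
    ["yes", "omnivore", None, "4"],  # a skip: they kept going
    ["no", "vegan", None, None],
]
quits = quits_per_question(responses)
print(quits)  # [0, 0, 2, 0]
print(flag_after_skip_logic(quits, skip_logic_qs={1}, total=len(responses)))  # [2]
```

Here two of four respondents quit at the question right after the skip logic question, which is the kind of spike worth investigating.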

2. Receiving nonsensical results

Let’s revisit our vegan-meat example. What happens if your respondents say that they enjoy a particular type of meat, even though they mentioned being vegan earlier in the survey?

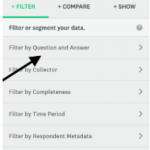

You can use Filters in Analyze to spot results that don’t make sense. Here’s how:

- Select Filter by Question and Answer from the Filter menu on the left of your screen.

- Select a question that uses skip logic and choose one of its answer choices to test.

- Check whether the responses to your follow-up questions seem reasonable for that answer.

For example, you might filter by respondents who answered that they were vegan on the skip logic question and look for meat-related answers later in the survey. If you spot a lot of meat-related answers, you may have a problem with your skip logic.
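If you work with exported data, the same filter-and-check can be scripted. This minimal Python sketch assumes each response is a dictionary keyed by hypothetical question IDs (`diet`, `favorite_meat`) and that you supply the set of answers that contradict the filter answer. None of these names come from SurveyMonkey.

```python
from typing import Any, Iterable


def contradictions(responses: list[dict[str, Any]], filter_q: str, filter_answer: str,
                   followup_q: str, invalid: Iterable[str]) -> list[Any]:
    """IDs of respondents who chose filter_answer but then gave an answer in `invalid`."""
    bad = set(invalid)
    return [r["id"] for r in responses
            if r.get(filter_q) == filter_answer and r.get(followup_q) in bad]


responses = [
    {"id": 1, "diet": "Vegan", "favorite_meat": None},       # correctly skipped past
    {"id": 2, "diet": "Vegan", "favorite_meat": "Chicken"},  # contradiction
    {"id": 3, "diet": "Omnivore", "favorite_meat": "Beef"},
    {"id": 4, "diet": "Vegan", "favorite_meat": None},
]
MEATS = {"Beef", "Chicken", "Pork", "Fish"}

flagged = contradictions(responses, "diet", "Vegan", "favorite_meat", MEATS)
vegans = sum(1 for r in responses if r.get("diet") == "Vegan")
print(flagged)                                   # [2]
print(f"{len(flagged) / vegans:.0%} of vegans")  # 33% of vegans
```

A high share of flagged respondents suggests the skip logic isn’t routing vegans past the meat question.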

Keep in mind that respondents can drop out of your survey for other reasons, too. If you’re curious, we asked respondents what’s most likely to cause them to leave a survey.

Did your questions bias the results?

Questions that have one answer option as a clear winner are often the most valuable and interesting kind. But if an answer option is radically or suspiciously dominant, it may be a sign that there’s bias in your question wording.
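One way to make “suspiciously dominant” concrete is to compute each question’s top answer share and reread the wording of any question above a cutoff. The Python sketch below uses an arbitrary 80% cutoff as an assumption. A high share alone doesn’t prove bias; it only tells you which questions to recheck first.

```python
from collections import Counter
from typing import Optional, Sequence


def top_answer_share(answers: Sequence[Optional[str]], cutoff: float = 0.8):
    """Return (top answer, its share of non-blank answers, whether share >= cutoff)."""
    counts = Counter(a for a in answers if a is not None)  # ignore blanks
    if not counts:
        return None, 0.0, False
    answer, n = counts.most_common(1)[0]
    share = n / sum(counts.values())
    return answer, share, share >= cutoff


answers = ["Agree"] * 9 + ["Disagree", None]
print(top_answer_share(answers))  # ('Agree', 0.9, True)
```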

We’ve written an entire guide to spotting and avoiding common mistakes in your question wording, but here are some of the basics:

- Is there any judgment or opinion in your question prompt? If there is, it may be a leading question.

- Are the answer choices or question prompt leaning a certain direction? Unbalanced questions can influence respondents.

- Is the question in an agree/disagree format? Acquiescence bias may be at play.

If you’ve checked the wording of your question and none of these issues have popped up, there’s probably nothing wrong with your question. It has simply identified an opinion that most of your respondents share.

Was your survey overwhelming?

Every respondent has a limit to their patience. A long survey tests that patience, pushing the respondent to either leave the survey or engage in satisficing. Satisficing is when respondents begin choosing answers that don’t reflect their actual opinions in order to get through the survey faster.

To find respondents’ breaking point and see where they leave, look at the number of responses to each question. When that number takes a significant nosedive and fails to recover, it’s safe to assume that many respondents have left.
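That eyeball check can be written as a minimal Python sketch, assuming you’ve copied the number of responses to each question into a list in question order. “Significant nosedive” and “fails to recover” have no standard definition, so the 15% drop and the never-regains-the-earlier-count rule below are assumptions you should tune.

```python
from typing import Optional, Sequence


def breaking_point(answered: Sequence[int], min_drop: float = 0.15) -> Optional[int]:
    """Index of the first question whose response count falls by at least `min_drop`
    (relative to the previous question) and never climbs back afterward."""
    for i in range(1, len(answered)):
        prev, cur = answered[i - 1], answered[i]
        if prev and (prev - cur) / prev >= min_drop:
            # "Fails to recover": no later question regains the pre-drop count.
            if all(c < prev for c in answered[i:]):
                return i
    return None


counts = [200, 198, 195, 140, 138, 135]
print(breaking_point(counts))  # 3, i.e. the fourth question
```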

Spotting a satisficer isn’t as easy. But looking out for the following signs can help:

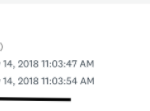

- Completion times that differ suspiciously from the average. More specifically, the respondent takes either a very long time to finish your survey or almost no time at all.

You can view response times by visiting Individual Responses in SurveyMonkey Analyze. Each respondent will have an associated length of time that they spent on your survey.
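Once you have those times, a simple rule can flag the extremes. This Python sketch compares each respondent to the median time rather than the average, because a few very slow respondents can drag the average upward; that swap is a choice made for the sketch. The one-third and three-times cutoffs and the respondent IDs are assumptions, not SurveyMonkey defaults.

```python
from statistics import median


def time_outliers(seconds_by_id: dict[str, float], low: float = 1 / 3,
                  high: float = 3.0) -> list[str]:
    """IDs whose completion time is below low * median or above high * median."""
    mid = median(seconds_by_id.values())
    return sorted(rid for rid, secs in seconds_by_id.items()
                  if secs < mid * low or secs > mid * high)


durations = {"r1": 310, "r2": 280, "r3": 45, "r4": 295, "r5": 2400}
print(time_outliers(durations))  # ['r3', 'r5']
```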

- A respondent selects the same answer option for every question—otherwise known as “straightlining.”

To spot this pattern for a given respondent, look at their responses throughout the survey in the Individual Responses tab. If, for example, they chose answer option C for every question, their responses may be questionable.
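Checking every respondent by hand gets tedious on a large survey. Here is a minimal Python sketch of the same check. It assumes each respondent’s answers to a set of comparable multiple-choice questions are in a list, and the five-answer minimum is an arbitrary guard so that someone who answered only two questions isn’t flagged.

```python
from typing import Optional, Sequence


def is_straightliner(answers: Sequence[Optional[str]], min_answers: int = 5) -> bool:
    """True if the respondent gave the same answer to every question they answered."""
    given = [a for a in answers if a is not None]  # ignore blanks
    return len(given) >= min_answers and len(set(given)) == 1


respondents = {
    "r1": ["C"] * 8,
    "r2": ["C", "B", "C", "D", "A", "C"],
    "r3": ["C", "C"],
}
print([rid for rid, ans in respondents.items() if is_straightliner(ans)])  # ['r1']
```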

- The respondent skips open-ended questions or answers them with only a few words.

It’s easy to check for skips on an open-ended question using the techniques we’ve already gone over in this article. But deciding whether a given response is “good” or not is ultimately up to you.
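Word count is only a rough proxy for a thoughtful answer, but it can shortlist responses worth reading first. This Python sketch treats a blank answer, or one with fewer than three words, as weak; the cutoff is an assumption.

```python
from typing import Optional, Sequence


def is_weak(answer: Optional[str], min_words: int = 3) -> bool:
    """True if an open-ended answer is blank or shorter than `min_words` words."""
    return answer is None or len(answer.split()) < min_words


def weak_share(answers: Sequence[Optional[str]], min_words: int = 3) -> float:
    """Fraction of answers to one open-ended question that are blank or very short."""
    if not answers:
        return 0.0
    return sum(is_weak(a, min_words) for a in answers) / len(answers)


answers = ["ok", None, "The checkout page kept timing out", "   ", "Loved the new menu"]
print(weak_share(answers))  # 0.6
```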

Open-ended questions often have a relatively high number of skips, and that’s no coincidence: they’re among the most taxing question types for respondents. So whenever possible, avoid using more than 2 open-ended questions in your survey.

The presence of the patterns we’ve described in your survey results doesn’t guarantee that there’s a problem with your survey. However, these patterns can help you diagnose potential issues that merit a closer look.

Ready to master survey design? Start by reading our free eGuide, Writing survey questions like a pro. It’s got everything you need to start creating focused, flawless surveys today.